You’ve turned on GA4 BigQuery Export – What now?

Andreas Nguyen Arentoft

Innovation Director

When Google announced their new analytics platform, initially named Web+App, the feature I and many got the most excited about was the native Google BigQuery export which until then, had been locked behind the paid version of Google Analytics. However, as the years have passed and the platform has now (almost) replaced the predecessor, Universal Analytics, marketers are still stumbling to get the most of this powerful feature (at least not without racking up obscene cloud costs).

However, fear not, because not only will I now take you through some of the most frustrating “features” (definitely not bugs) that hurts the usability of the BigQuery export, but we will also go through how we created a solution to all the bigger issues . But before that, what is the Google BigQuery export and why should you use it?

What is Google BigQuery export?

Google’s ecosystem covers many areas, but the trifecta of advertising, analytics and cloud is not only their most profitable business units, but also their most interconnected. With the BigQuery export, you get a native integration between your analytics solutions and a data warehouse solution, meaning you get access to fast, highly customisable and fully-managed data warehouse solutions for your analytics data, which enable analysts to get even more out of your data.

Data-savvy marketers can leverage this integration to build custom audiences, run large-scale product analysis, and deploy advanced advertising evaluation models – all within a fully-managed environment that is natively integrated with both your ad platforms and web analytics. If you want to get ahead in the competitive arena of digital marketing nowadays, this is a must-have. But it is sadly not as easy as this.

What are the limitations of the GA4 BigQuery export?

Using the GA4 BigQuery export can be powerful, but equally frustrating and expensive. The two biggest problems we’ve identified are:

No sessions: One of the major differences between Universal Analytics and Google Analytics 4 is the lack of a concept called ‘Sessions’. This grouping of events was restrictive in a world where events could exist in both Web and App, which meant it had to go in this new world. However, since the export does not group events into sessions, you’ll likely end up running your queries on much more data when you run your reports on the raw GA4 export, since you cannot filter out sessions and events that are uninteresting for you.

No Google Ads data: One of the more odd quirks of the GA4 export is the lack of proper access to Google Ads data if auto-tagging is enabled. If you look at a “Session” from Google Ads, you’ll see it marked as “google / organic” unless you use manual tagging and you’ll be missing campaign details, despite having a GCLID which indicates that the session came from a Google Ads clicks. Google has admitted that this is a bug, but have provided no indication of when this will be fixed. Additionally, this won’t be solved retroactively, meaning all the Google Analytics 4 data you send to BigQuery will remain marked wrongly.

What is the solution to these problems?

At Precis, we work a lot with Google Analytics data and this really caused a lot of headaches. However, with the help of a great open source library and our own data management platform, Bifrost, we’ve found a way to tackle these pesky issues and finally make using GA4 BigQuery Exports a viable analytics tool. So your question must now be – How did we do it?

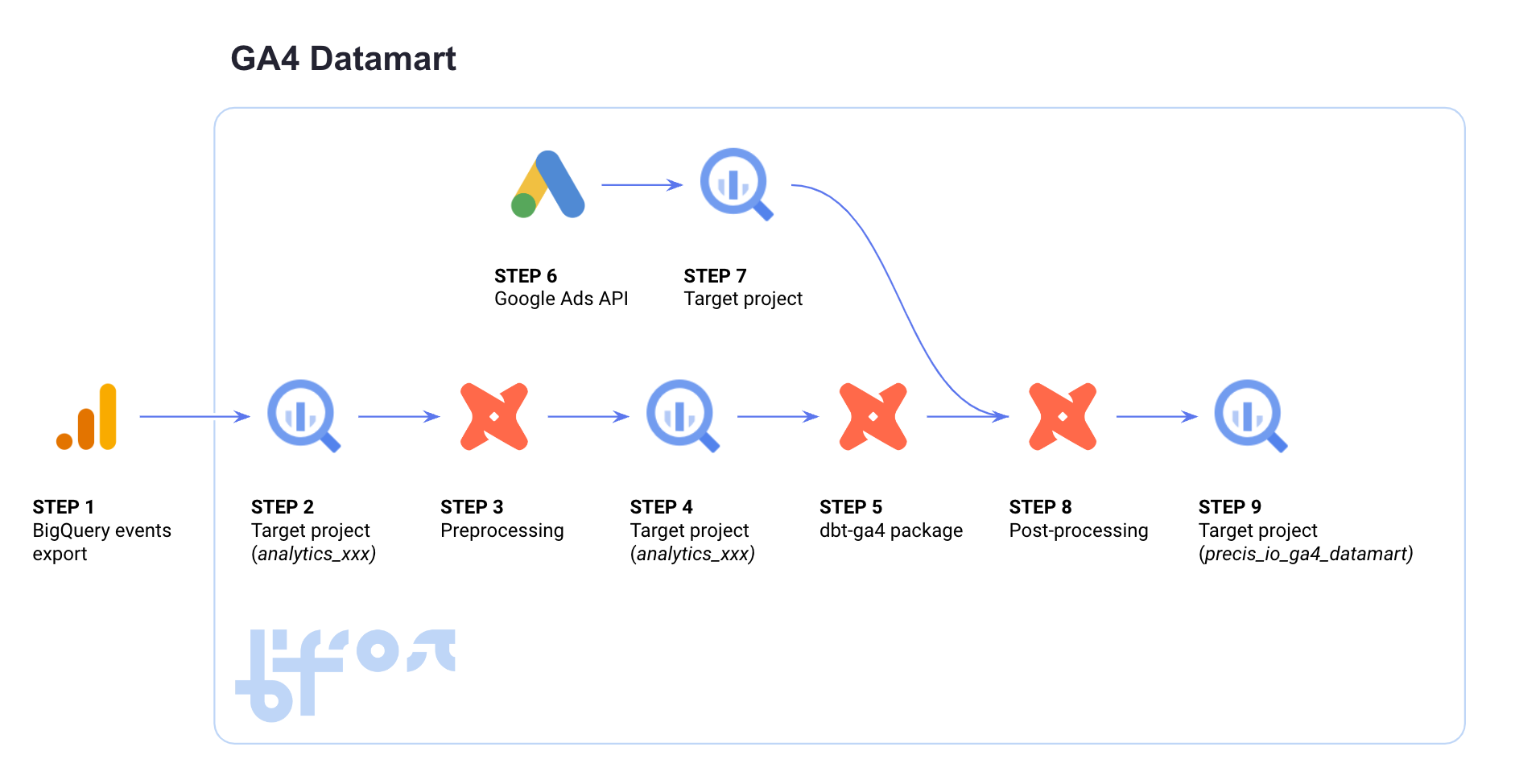

Dataflow chart explaining our solution

To solve the issues, we developed a datamart that leverages the open source library by Velir named dbt-ga4 (link to GitHub repository and a big thanks from Precis!). This dbt package is rapidly becoming the industry standard for making event data queryable like session data, which is great to get uniformity in the industry. But whereas the package make the data easier to manage, it still processes a lot of data if run on your entire GA4 export, so we have made it more user friendly and a viable option by only applying the transformation on smaller batches (7 days) of data ongoingly rather than the full report after the initial backfill (step 3 in dataflow). We then daily appends a staging table with the transformed data thus making sure we always have up-to-date data available while keeping the cost low. Without this, you would be forced to run a very costly operation on all your data every day.

Additionally, our solution fetches your Google Ads data from the Google Ads API and replaces the erroneous/missing data about the campaigns with the data from Google Ads (step 6-8 in dataflow chart). This data is also stored in BigQuery, giving you an additional benefit of having easily queryable Google Ads reports.

Disclaimer: There is currently no way to transform App data into the format that resembles Session data since the concept is not directly applicable. We are working on a fix to this and will include this in any future updates.

As mentioned, the solution is run via Bifrost, our data management system, which is our UI for deploying DBT workflows and managing data integration between ad platforms (plus a few other connections) and Google BigQuery. Having invested in developing Bifrost really paid off when we wanted to scale this solution and made the process incredible smooth and a dream to deploy. However, your companies can hopefully learn from our insight into how the datamart is designed and use it yourself.

Great! But I don’t have the resources to deploy something like this…

The reason we are making a public announcement of this solution is that we want to invite companies to start using it beyond our clients. We know the challenges and did not want to keep this just to ourselves. If you are interested in getting serious about GA4 analysis and would like a headstart by utilising our data management system to run your dbt workflows, just get in touch with us to let us know!