The state of JavaScript SEO 2020

Johan Nilsson

Senior Digital Specialist

JavaScript SEO has been a hot topic in recent years, and a lot has happened. Google, along with Martin Splitt & Co, has done a great job of catching up and making life a bit easier for SEO specialists. However, there are still pitfalls to avoid, both for SEO and for Google Ads. This blog post covers the state of JavaScript and Google Search today, and the most common JavaScript problems we encounter when we advise clients on site moves and site redesigns.

Is a client-side rendered (CSR) JavaScript webpage always bad for SEO?

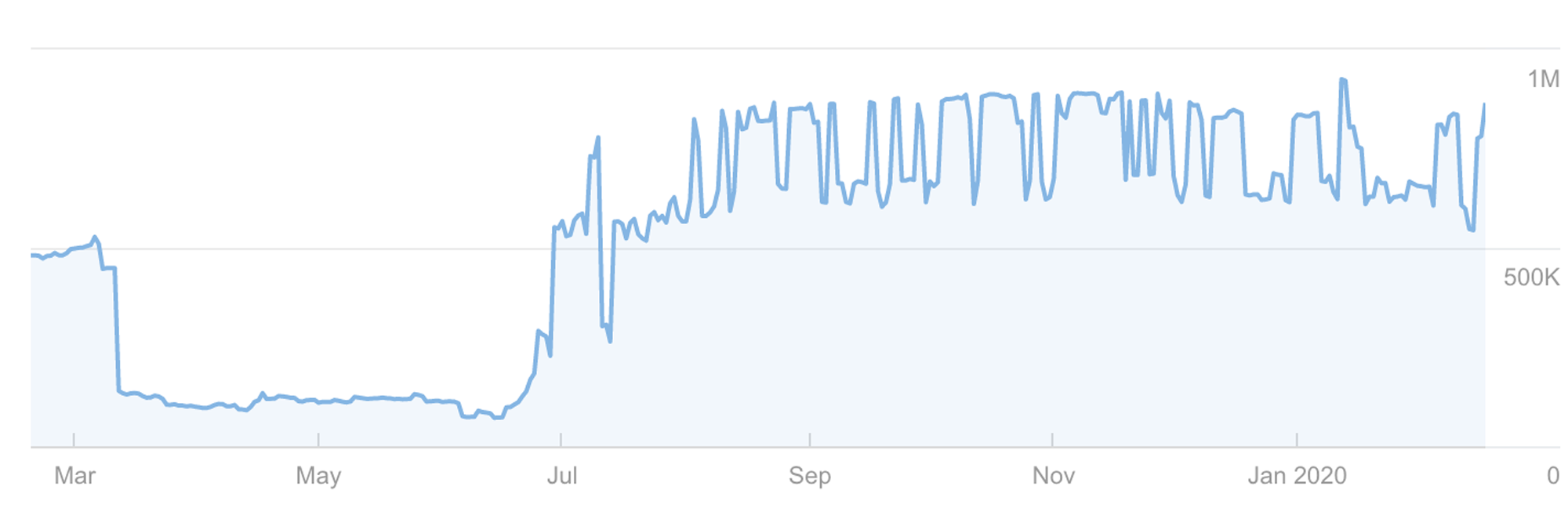

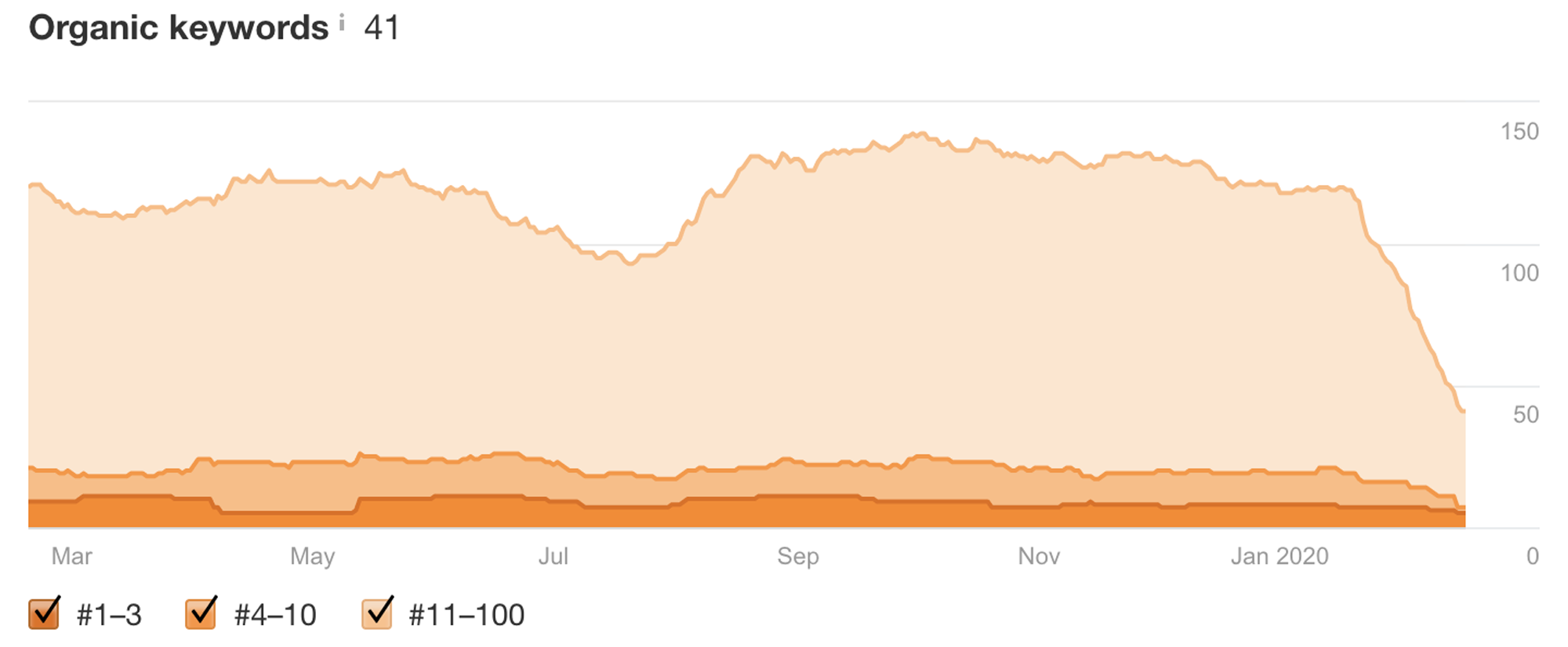

Before we dive into the technical details, let’s answer the question above. You often see case studies where things have gone wrong after a site move to a JavaScript framework, but there are also examples of increased organic traffic after such a move. If the code is written according to SEO best practice and tested before launch, the only drawback we know of for sure is that your pages will go through the two waves of indexing (more on that later). This means it can take longer to get your pages indexed in Google, which is a competitive disadvantage. The graphs in Example 1 and Example 2 show two different outcomes after implementing React without dynamic rendering. So the answer is no, it is not always bad for SEO, but we highly recommend implementing a solution that avoids the two waves of indexing.

Example 1: traffic after site move

Example 2: traffic after site move

Crawling and indexing

The source code is like the recipe, written in HTML, CSS and JavaScript, while the rendered DOM is the baked cake: the result after your code has been executed.

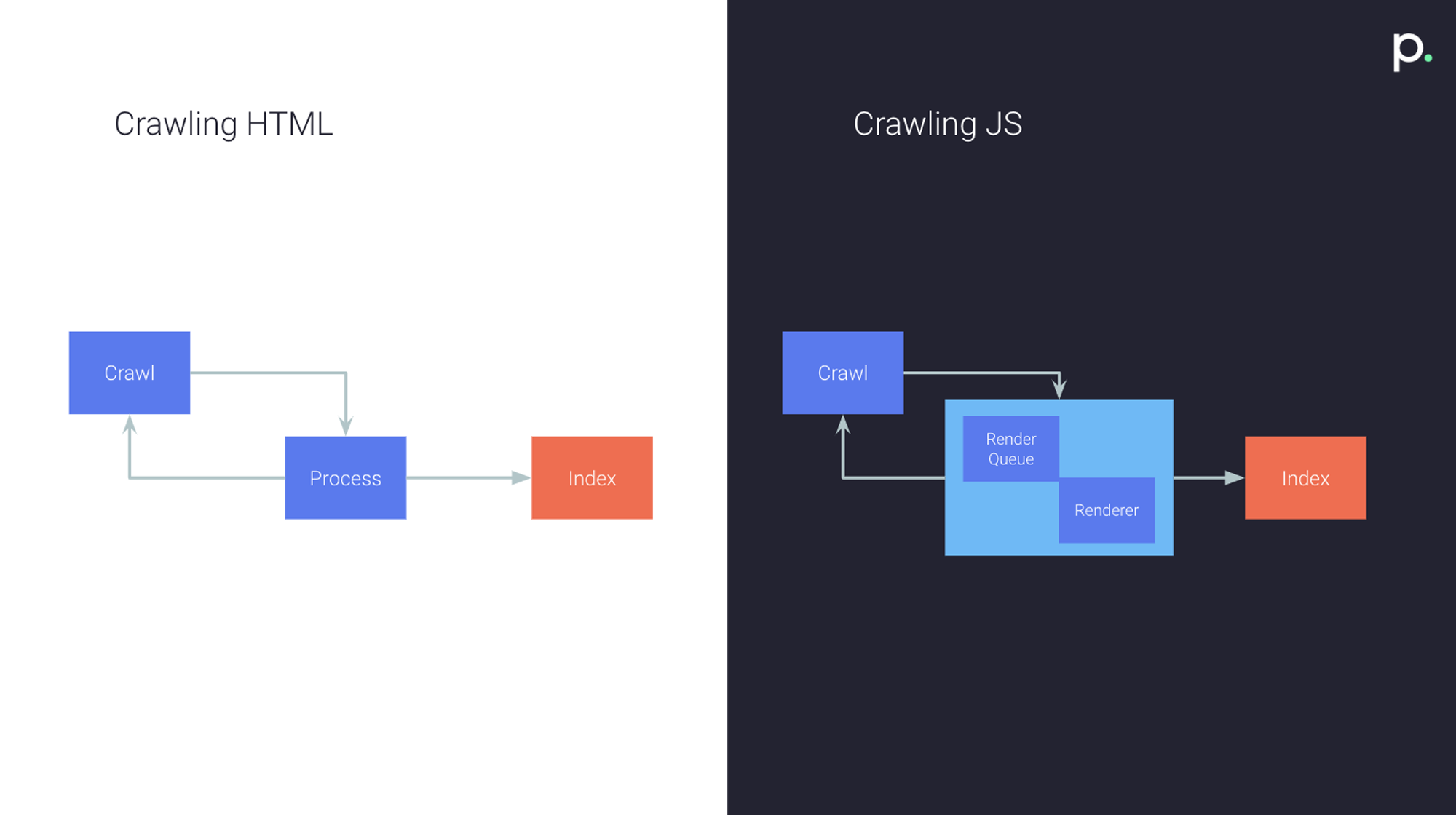

If your source code only contains HTML and CSS, Googlebot can download the HTML file, extract the links from the source code and visit them when the crawl budget allows. Googlebot then sends the downloaded resources to the indexer (Caffeine).
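To make the first step concrete, here is a minimal TypeScript sketch of what a crawler can learn from the source code alone: it pulls `href` values out of `<a>` tags without executing any JavaScript. The regex-based parsing and the two sample documents are simplifications for illustration, not a description of how Googlebot is implemented.

```typescript
// Extract link targets from raw (unrendered) HTML, the way a crawler can
// before any JavaScript runs. A regex is a simplification; real crawlers
// use a full HTML parser.
function extractLinksFromSource(html: string): string[] {
  const links: string[] = [];
  const anchorHref = /<a\s[^>]*href\s*=\s*"([^"]*)"/gi;
  let match: RegExpExecArray | null;
  while ((match = anchorHref.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

// A plain HTML page: the links are present in the source code.
const staticPage = `<nav><a href="/dresses">Dresses</a> <a class="nav" href="/shoes">Shoes</a></nav>`;

// A client-side rendered shell: the links only exist after app.js has run.
const csrShell = `<div id="root"></div><script src="/app.js"></script>`;

console.log(extractLinksFromSource(staticPage)); // [ '/dresses', '/shoes' ]
console.log(extractLinksFromSource(csrShell)); // []
```

For the CSR shell, there is nothing to follow until the page has been rendered, which is exactly the gap the second wave has to fill.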

It gets more complicated with a JavaScript-based site. In this case, Googlebot needs to involve Google’s Web Rendering Service (WRS) to parse, compile and execute the JavaScript before the indexer can index the content. Because the page is first processed from the source code and then again after rendering, this is called the two waves of indexing.

“The rendering of JavaScript-powered websites in Google Search is deferred until Googlebot has resources available to process that content.” This quote is from Chrome Dev Summit 2018, where Google also said that it can take up to a week before rendering is complete. In our experience it can take even longer, with delays of up to a month reported. Google is working on a more instant rendering service, but in the meantime, if you are a news publisher or another business that needs pages indexed quickly, we highly recommend one of the alternatives: server-side rendering (SSR), isomorphic JavaScript or dynamic rendering. With any of these, you avoid going through the two waves of indexing.

Evergreen Googlebot

Google announced in May 2019 that Googlebot would from then on run the latest version of Chromium.

Googlebot ran on Chromium 41 from 2015 to 2019, which was a big problem for websites built with modern JavaScript frameworks. ES6 was only partially supported, and interfaces such as IndexedDB and WebSQL were disabled.

Luckily, with the evergreen Googlebot, the problems mentioned above are a thing of the past. Good job, Google!

Rendering

For an SEO specialist, it is good to have some general knowledge of the different rendering solutions and of how each of them affects crawling and indexing. I will not go in depth on how they work technically, but will give a brief overview using React as the framework.

CSR (Client-side rendering)

- The server sends a response to the browser (an HTML shell that references the JS)

- The browser downloads the JS

- The browser executes React

- The page becomes viewable and interactive at the same time

Pros:

- The website feels really fast after the initial page load (remember that Googlebot does not “interact” with your page; it downloads every document as if it were the first visit)

- Great for web apps

Cons:

- The initial load can take longer

- Slower indexing

SSR (Server-side rendering)

- The server sends a ready-to-render HTML response to the browser.

- The browser renders the page (now viewable) and downloads the JS.

- The browser executes React and the page becomes interactive.

Pros:

- Initial page loads fast (Good for SEO and user experience)

- Faster indexing

Cons:

- Frequent server requests

- Slower navigation overall, as the full page reloads when you visit another page

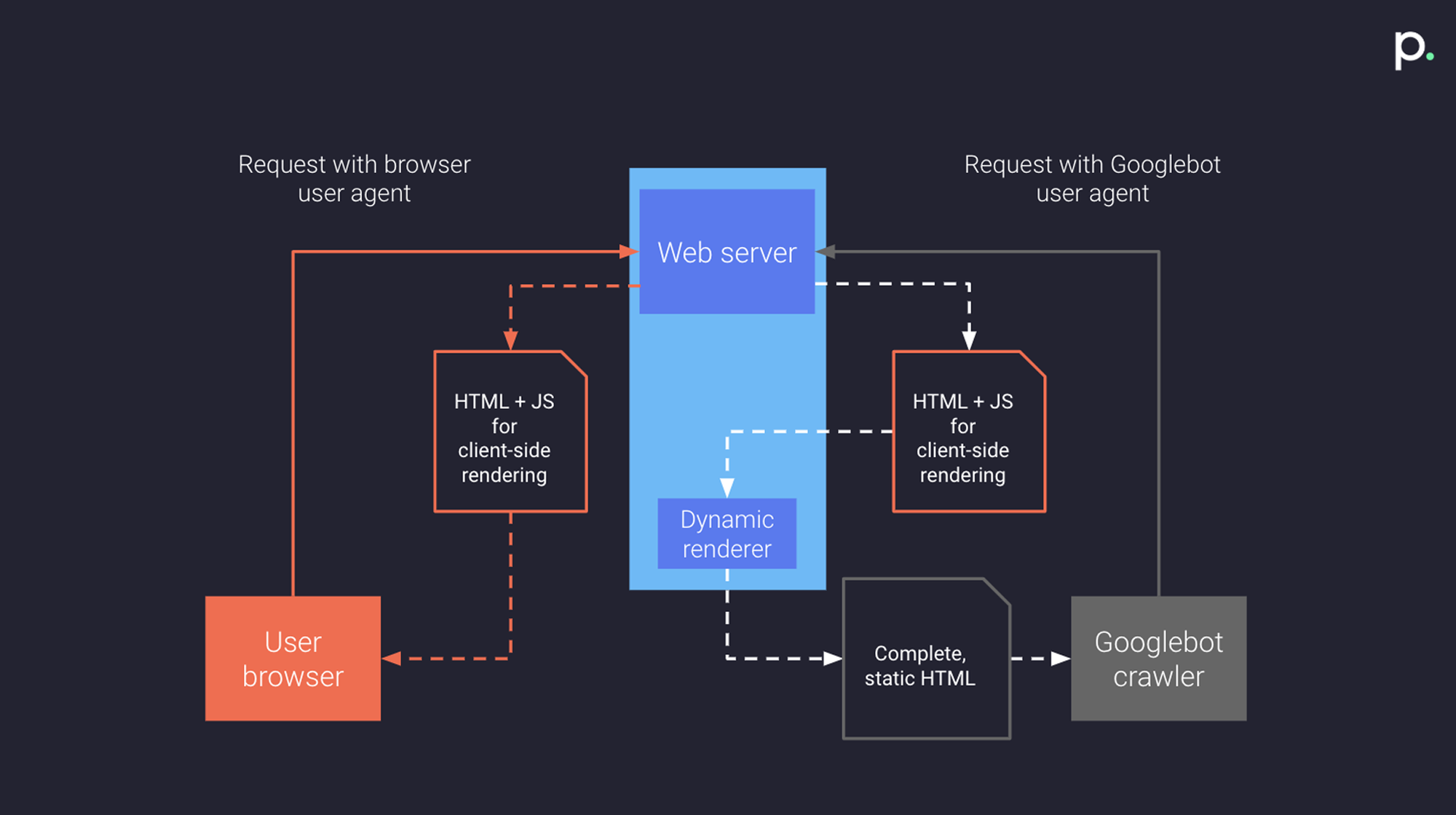
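The practical difference between CSR and SSR is what the first HTTP response contains. The sketch below is a simplified model, not React code: `renderProduct` is a hypothetical stand-in for a component, the CSR response is an empty shell that only fills in once the browser runs the bundle, and the SSR response already contains the text. The product data and markup are made up for illustration.

```typescript
interface Product {
  title: string;
  price: string;
}

// Hypothetical stand-in for a React component: turns data into markup.
// No HTML escaping is done here; this is a toy example.
function renderProduct(p: Product): string {
  return `<h1>${p.title}</h1><p>${p.price}</p>`;
}

// CSR: the server returns a shell; content appears only after /bundle.js runs.
function csrResponse(): string {
  return `<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>`;
}

// SSR: the server runs the render step itself and sends finished HTML.
// (With React this is typically done with renderToString from react-dom/server.)
function ssrResponse(p: Product): string {
  return `<html><body><div id="root">${renderProduct(p)}</div><script src="/bundle.js"></script></body></html>`;
}

const dress: Product = { title: "Yellow dress", price: "49 EUR" };

// What a crawler sees before rendering: is the title in the response body?
console.log(csrResponse().indexOf(dress.title) !== -1); // false
console.log(ssrResponse(dress).indexOf(dress.title) !== -1); // true
```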

Dynamic Rendering

With this solution, you switch between client-side rendered content and pre-rendered content depending on the user agent. A normal user gets the HTML and JS for client-side rendering, just like with CSR, while Googlebot gets complete static HTML.

Solutions: Rendertron, Puppeteer, and prerender.io.
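The switching logic itself can be small. This is a minimal sketch assuming a hypothetical, non-exhaustive list of crawler user-agent substrings; in a real setup the pre-rendered HTML would come from a service such as Rendertron or prerender.io, and here only the decision is modeled.

```typescript
// Hypothetical, non-exhaustive list of crawler user-agent substrings.
const BOT_UA_PATTERNS = ["googlebot", "adsbot-google", "bingbot"];

function isCrawler(userAgent: string): boolean {
  const ua = userAgent.toLowerCase();
  return BOT_UA_PATTERNS.some((pattern) => ua.indexOf(pattern) !== -1);
}

type RenderMode = "prerendered" | "client-side";

// Crawlers get static, pre-rendered HTML; everyone else gets the CSR app.
function chooseRenderMode(userAgent: string): RenderMode {
  return isCrawler(userAgent) ? "prerendered" : "client-side";
}

console.log(chooseRenderMode("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")); // prerendered
console.log(chooseRenderMode("AdsBot-Google (+http://www.google.com/adsbot.html)")); // prerendered
```

Including the Google Ads crawler in the list matters if you also run ads to these pages. User-agent strings can be spoofed; if that is a concern, Google documents how to verify Googlebot with a reverse DNS lookup.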

Pros:

- Faster indexing

- You only serve rendered HTML to bots

Cons:

- Creates another layer of complexity. In many cases, you need to keep track of two environments.

- If pre-rendering fails and you don’t have a framework and routine in place to monitor it, you might end up serving Googlebot empty or incomplete documents.
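A monitoring routine can start as a simple sanity check on each pre-rendered document before it is served or cached. This sketch assumes you can get the status code and HTML of a snapshot; the thresholds (a non-empty `<title>`, at least 200 characters of visible text) are illustrative assumptions to tune per page template.

```typescript
interface Snapshot {
  statusCode: number;
  html: string;
}

// Returns a list of problems; an empty list means the snapshot looks usable.
// minTextLength is an assumed threshold, not a Google recommendation.
function checkSnapshot(snap: Snapshot, minTextLength = 200): string[] {
  const problems: string[] = [];
  if (snap.statusCode !== 200) {
    problems.push(`unexpected status ${snap.statusCode}`);
  }
  const titleMatch = /<title>([^<]*)<\/title>/i.exec(snap.html);
  if (titleMatch === null || titleMatch[1].trim() === "") {
    problems.push("missing or empty <title>");
  }
  // Rough visible-text estimate: drop scripts, styles and tags.
  const text = snap.html
    .replace(/<script[\s\S]*?<\/script>/gi, "")
    .replace(/<style[\s\S]*?<\/style>/gi, "")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
  if (text.length < minTextLength) {
    problems.push(`only ${text.length} characters of text`);
  }
  return problems;
}

// An empty app shell is flagged twice: empty title and no text.
const emptyShell: Snapshot = {
  statusCode: 200,
  html: `<html><head><title></title></head><body><div id="root"></div></body></html>`,
};
console.log(checkSnapshot(emptyShell));
```

Running a check like this on a sample of URLs after every deploy is one way to notice a broken pre-renderer before Googlebot does.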

Isomorphic JavaScript

The code can run both on the server and in the browser. The first request is processed by the server (rendered HTML), while subsequent requests are processed by the client in the browser. In other words, you get the first page as rendered HTML from the server, and the rest of the functionality is client-side rendered. With this solution, you get the best of both SSR and CSR.

Pros:

- Faster indexing

- Fast load time

Cons:

- Can be hard to implement

- Requires certain frameworks

- Higher server load than pure CSR

Pitfalls

This is a sample of the problems we have encountered in relation to JavaScript SEO and site moves, not a complete list. Where a problem also affects Google Ads, that is covered as well.

1. Sneaky 404s

With single-page applications, a page can look like it is working, but if you check the status code, it returns 404.

SEO problems:

Deindexing and a decline in traffic, because Googlebot treats pages that respond with 404 as not working.

Increased organic traffic after the sneaky 404s were fixed in June.

Google Ads problems:

- Ad disapprovals, because the Google Ads bot thinks the pages are not working

- A lower Quality Score if you have to pick a non-optimized landing page for the keyword instead

How to detect it:

- The URL Inspection tool in Google Search Console

- The console in Chrome
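Besides the URL Inspection tool, a small script can check the raw HTTP status of a list of URLs before and after launch. This sketch takes the fetch function as a parameter so it can be tested offline; in Node 18+ or a browser you could pass the global `fetch`. The example URLs are hypothetical.

```typescript
// Only the part of a fetch Response this check needs.
interface StatusOnly {
  status: number;
}

// Anything that can fetch a URL and report a status, e.g. the global fetch.
type Fetcher = (url: string) => Promise<StatusOnly>;

// Return every URL that does not answer with HTTP 200.
// Note: the global fetch follows redirects by default, so a 301 is reported
// with the status of its final destination.
async function findNon200(urls: string[], fetcher: Fetcher): Promise<string[]> {
  const failures: string[] = [];
  for (const url of urls) {
    const res = await fetcher(url);
    if (res.status !== 200) {
      failures.push(`${url} -> ${res.status}`);
    }
  }
  return failures;
}

// Example run against live URLs (Node 18+), using the global fetch:
// findNon200(["https://www.example.com/", "https://www.example.com/dresses"], fetch)
//   .then((failures) => console.log(failures));
```

A page that renders fine in the browser but shows up in this list is a sneaky 404 candidate.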

How to fix it:

https://web.dev/codelab-fix-sneaky-404/
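One possible cause of this pattern, offered as an assumption rather than a diagnosis of any particular site, is a server that only knows the start page and serves the app through its 404 fallback, so every deep link renders fine in the browser but carries a 404 status. A sketch of the server-side decision, with a hypothetical list of routes the app can render:

```typescript
// Hypothetical route patterns the single-page app can actually render.
const APP_ROUTES: RegExp[] = [/^\/$/, /^\/dresses$/, /^\/dresses\/[a-z0-9-]+$/];

// Serve the app shell with 200 for known routes, and a real 404 otherwise,
// instead of answering every deep link through the 404 fallback page.
function statusForPath(path: string): 200 | 404 {
  return APP_ROUTES.some((route) => route.test(path)) ? 200 : 404;
}

console.log(statusForPath("/dresses/yellow-dress")); // 200
console.log(statusForPath("/old-page")); // 404
```

Matching a route pattern does not prove that the product behind it exists; a 200 for a missing product leads to the opposite problem, the soft 404.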

2. Soft 404s

A soft 404 is a page that shows an error such as ‘not found’ but returns a 200 status code. This can be particularly tricky with single-page applications.

SEO problems:

Error pages can be indexed and may show up in the search results

Google Ads problems:

The automatic disapproval of ads with 404 final URLs may not kick in, and you will send traffic to broken pages

How to detect it:

URL Inspection tool in Google Search Console

How to fix it:

https://developers.google.com/search/docs/guides/fix-search-javascript
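Google’s JavaScript SEO documentation describes two ways out for single-page apps: redirect with JavaScript to a URL where the server answers with a 404, or add a `noindex` robots meta tag to the error view. In this sketch the decision is kept as a pure function so it is easy to test; the `/not-found` path and the `productExists` flag are assumptions about your app, and the browser-side calls are shown as comments.

```typescript
type NotFoundAction =
  | { kind: "redirect"; to: string }
  | { kind: "noindex" };

// Decide what to do when a client-side route resolves to missing content.
// Returns null when the content exists and should render normally.
function onMissingContent(
  productExists: boolean,
  strategy: "redirect" | "noindex"
): NotFoundAction | null {
  if (productExists) return null;
  // "/not-found" is a hypothetical URL that the server answers with HTTP 404.
  return strategy === "redirect" ? { kind: "redirect", to: "/not-found" } : { kind: "noindex" };
}

// Applying the action in the browser could look like:
//   if (action?.kind === "redirect") window.location.href = action.to;
//   if (action?.kind === "noindex") {
//     const meta = document.createElement("meta");
//     meta.name = "robots";
//     meta.content = "noindex";
//     document.head.appendChild(meta);
//   }
```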

3. Lazy loading

If the lazy-loading functionality is built on scroll events, the lazy-loaded content will not be indexed by Google. Googlebot does not interact with the page (it does not scroll); instead, it renders the page with a tall viewport. If you lazy load content when it becomes visible in the viewport, you are fine.

SEO problems:

Lazy loaded content will not be indexed

Google Ads problems:

I have no direct experience here. Possibly a worse landing page experience if key content is lazy loaded with scroll events?

How to detect it:

If it is text, do a site search in Google like this to see whether it is indexed: site:mywebpage.com/pageiwanttotest “text i want to see if it is indexed”. Use this Puppeteer script for images.
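For images, a lighter-weight check is to copy the rendered HTML from the URL Inspection tool and look for images that still carry only a placeholder. This sketch assumes your lazy loader keeps the real image URL in a `data-src` attribute until it fires; adjust the attribute name to your library.

```typescript
// Find <img> tags in rendered HTML whose real URL is still parked in data-src,
// i.e. the lazy loader never swapped it into src during rendering.
// Regex-based and assumes double-quoted attributes; a simplification.
function findUnloadedLazyImages(renderedHtml: string): string[] {
  const unloaded: string[] = [];
  const imgTag = /<img\b[^>]*>/gi;
  let match: RegExpExecArray | null;
  while ((match = imgTag.exec(renderedHtml)) !== null) {
    const tag = match[0];
    const dataSrc = /\sdata-src="([^"]*)"/i.exec(tag);
    const src = /\ssrc="([^"]*)"/i.exec(tag);
    // Flag the image if it has a data-src but no src, or a src that differs
    // from it (for example a placeholder GIF).
    if (dataSrc !== null && (src === null || src[1] !== dataSrc[1])) {
      unloaded.push(dataSrc[1]);
    }
  }
  return unloaded;
}
```

Any URL this returns for the rendered HTML is an image Google most likely did not see.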

How to fix it:

https://developers.google.com/search/docs/guides/lazy-loading#load-visible

4. No unique URLs for content you want indexed and ranked

If you perform a site move, make sure you keep the same URLs, or 301-redirect the old URLs to their new equivalents.

In this case, the site had a knowledge section with a unique URL for every topic. After the site move, the site swapped the content in place with JavaScript instead of linking to a new URL with the content.

SEO problems:

Lost rankings for many keywords.

Ranking on fewer keywords after site move

Google Ads problems:

This is not a problem directly associated with the example above, but if you rely on automatic item updates in Google Merchant Center to update availability, it can be a problem if the sizes don’t have unique URLs, as Google uses the information in the structured data for this. Let’s say you sell a yellow dress in 5 sizes and only XL is in stock. In many cases, the availability in the structured data for the yellow dress URL is set to in stock as long as one size is in stock. Google will then override the correct availability attribute [out of stock] with [in stock] in Google Merchant Center for the dresses in sizes S-L, which are correctly set to out of stock in the feed.

In this case, you need to turn off automatic item updates for availability, or create individual URLs for every size and canonicalize them to the main product URL. Otherwise, you risk getting a warning for inaccurate availability status (due to inconsistent availability between the feed and the landing page).
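To make the dress example concrete, the sketch contrasts the aggregated availability a single product URL often reports with per-size pages, each on its own URL and canonicalized to the main product. The `?size=` URL scheme and the size list are hypothetical; the availability values are the schema.org `InStock`/`OutOfStock` URLs used in product structured data.

```typescript
type Availability = "https://schema.org/InStock" | "https://schema.org/OutOfStock";

interface SizeStock {
  size: string;
  inStock: boolean;
}

interface VariantPage {
  url: string;
  canonical: string;
  availability: Availability;
}

// What many single-URL product pages do: "in stock" if any size is in stock.
function aggregatedAvailability(sizes: SizeStock[]): Availability {
  return sizes.some((s) => s.inStock) ? "https://schema.org/InStock" : "https://schema.org/OutOfStock";
}

// One URL per size (hypothetical ?size= scheme), each reporting its own
// availability and pointing its canonical at the main product URL.
function variantPages(productUrl: string, sizes: SizeStock[]): VariantPage[] {
  return sizes.map((s): VariantPage => ({
    url: `${productUrl}?size=${encodeURIComponent(s.size)}`,
    canonical: productUrl,
    availability: s.inStock ? "https://schema.org/InStock" : "https://schema.org/OutOfStock",
  }));
}

// The yellow dress: five sizes, only XL in stock.
const yellowDressSizes: SizeStock[] = [
  { size: "XS", inStock: false },
  { size: "S", inStock: false },
  { size: "M", inStock: false },
  { size: "L", inStock: false },
  { size: "XL", inStock: true },
];

console.log(aggregatedAvailability(yellowDressSizes)); // https://schema.org/InStock
```

The aggregated value claims the whole dress is in stock, while the per-size pages let each size report its real availability.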

5. Exclude “out of stock” in a dynamic search ads campaign

This problem is specific to Dynamic Search Ads campaigns, not SEO.

Google recommends excluding pages that contain “out of stock”. The problem with JavaScript web pages is that the source code can contain “in stock”, “out of stock” and “preorder” all at once. The exclusion functionality searches the source code for the string, not the rendered HTML, which can lead to all product pages being excluded. “Source code contains” would be a better name than “page contains”. Check the source code before you enter the string to exclude, and follow up on what happened the day after.
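A minimal illustration of why the exclusion can misfire, assuming a hypothetical in-stock product page whose inline JavaScript ships every availability label to the browser. The check is a plain substring search over the source code, mirroring the behavior described above; making it case-insensitive is a simplification of this sketch, not a claim about how Google Ads matches.

```typescript
// Hypothetical source code of an in-stock product page: the labels for every
// state are bundled into inline JS, and the visible label is set at runtime.
const productSource = `
<div id="stock-label"></div>
<script>
  const LABELS = { instock: "In stock", outofstock: "Out of stock", preorder: "Preorder" };
  document.getElementById("stock-label").textContent = LABELS.instock;
</script>`;

// Text a visitor sees after the script has run.
const renderedText = "In stock";

// Case-insensitive substring check (an assumption of this sketch).
function containsIgnoreCase(haystack: string, needle: string): boolean {
  return haystack.toLowerCase().indexOf(needle.toLowerCase()) !== -1;
}

console.log(containsIgnoreCase(productSource, "out of stock")); // true: the page would be excluded
console.log(containsIgnoreCase(renderedText, "out of stock")); // false: what you actually intended
```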

One solution is to use a page feed and include URLs based on their availability in Google Merchant Center.
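A sketch of generating such a page feed, assuming you already have product URLs and their availability from your Merchant Center data. Dynamic Search Ads page feeds are uploaded as spreadsheets with a `Page URL` column and an optional `Custom label` column; the label value and the `FeedItem` shape here are hypothetical.

```typescript
interface FeedItem {
  link: string;
  availability: "in_stock" | "out_of_stock" | "preorder";
}

// Quote a CSV field (RFC 4180 style): wrap in quotes, double embedded quotes.
function csvField(value: string): string {
  return `"${value.replace(/"/g, '""')}"`;
}

// Build a DSA page feed that only contains pages that are currently in stock.
function buildPageFeed(items: FeedItem[], label: string): string {
  const rows = items
    .filter((item) => item.availability === "in_stock")
    .map((item) => `${csvField(item.link)},${csvField(label)}`);
  return ["Page URL,Custom label"].concat(rows).join("\n");
}
```

Targeting the campaign at the custom label then keeps out-of-stock pages out of the ads without relying on what the source code contains.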

General recommendations

Involve SEO early in the site move project

It is important to involve an SEO specialist as early as possible in a site move project. Good developers need good SEOs, and good SEOs need good developers. If you decide to go with React, Angular 2 or any other framework with CSR, consider implementing one of the three alternatives mentioned above (SSR, isomorphic JavaScript or dynamic rendering) to avoid the two waves of indexing. It is often more expensive to fix something after launch than to get it right from the beginning.

Test before launch

Test before you go live. The URL Inspection tool in Google Search Console is free and lets you test whether Google can render your website properly. If the URL is behind a firewall or hosted on a local computer, you can use this solution to test it.

Don’t forget about Google Ads: make sure everything works as intended there as well.